A tour to virtual memory

Winter 2022 has finished and I have finished my Operating System course. Apparently, I did not take proper notes as I promised. However, I am now a beginner to kernel exploitation by taking this course. To compensate, I decided to write a beginner-friendly post on basic virtual memory.

RAM from the physical world

Let’s start with this actual ram in the real world. You can see there are pins on this electronic. RAM, as the name suggests, is a piece of (physical) memory that stores data. It is volatile, meaning the data will be lost if power is off.

How do we use this if we’re building a PC from scratch? Or how do we store or read data from this thing using electricity?

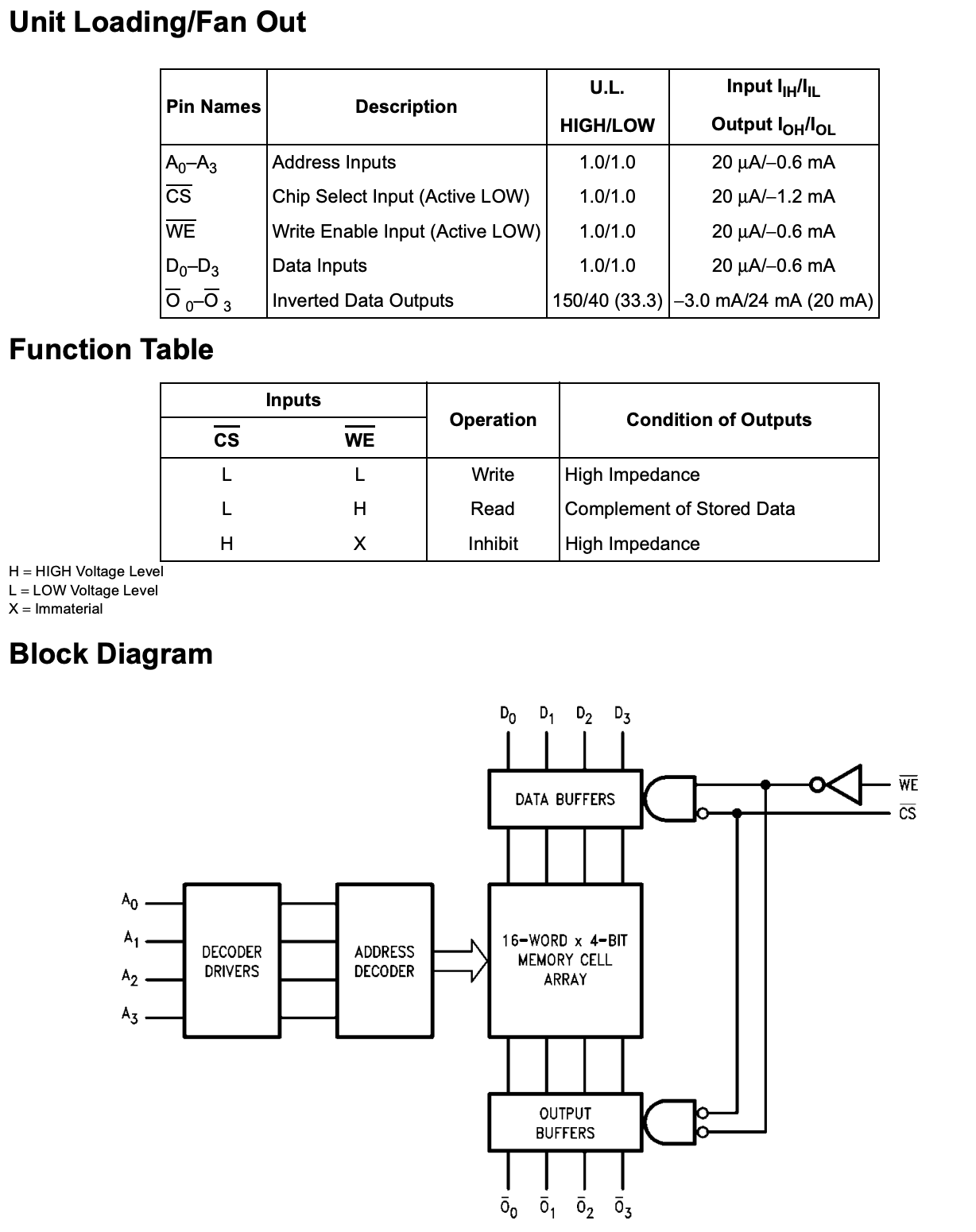

To write a value into an address, you apparently need address and value. Those correspond to A pins and D pins. To read from an address, you need to provide an address using A pins and read from O pins. One last function of this electronic is to switch functions between reading and writing, and it is done by the WE and CS pins.

You can see this electronic has pow(2, 4) addresses, and each address has a 4-bit value. So the total data it can contain is 32 bytes.

How do we utilize the memory as the kernel?

So, we have a piece of memory and we’re good to write an OS on top of that. An OS runs processes, and processes need memory. Think about it, how should we ration these memory to different processes?

Linearly distributed memory

A dumb but straightforward way is to split the whole memory space into blocks in the diagram. The layout is simplified but illustrates the idea.

There are a few obvious drawbacks to this simple implementation though.

- Process memory isolation

- Compilation needs to adapt (as a process may be loaded in different addresses, they cannot use absolute addresses)

- Inefficient use of memory

Despite the first two problems, the third is the most important one because it is related to money. Think about this, if you have to design an algorithm to utilize the memory most efficiently, what would you do?

Maybe you would say, let’s just don’t give boundaries to each process.

In this diagram, P2 has a larger memory available than the first implementation. That partially resolves the problem

But this is still a flawed design. For example, a process cannot expand its memory if it needs more. Another thing is that if P1 is terminated and another process bigger than P1 is waiting to be spawned, it is not possible to do so.

Paging

So far, I think you might already have a solution for this and it is indeed very intuitive: we can split the whole memory into chunks (pages), and we fool the processes that they have continuous memory, yet in the physical world they are not.

To do so, we must need some help from the hardware, and we need to store the data for real-address lookup. Throw all your past experiences with addresses, and let’s invent a memory management scheme from scratch.

Page table

The data structure we used to store look-up info is called “page table”. The page table is a key-value dict, with the key being the page/frame number and the value being the real physical address. Since the keys of page number are continuous, it is essentially a “page list”.

To do the lookup, we need to agree on a few things first:

- 32-bit address

- Page size is 0x1000

- The first 20 bits are for page/frame number

- The page table is at the physical address of 0x0.

Notice that the settings here are entirely arbitrary.

Suppose the hardware wants to find the physical address for virtual address 0x1337babe. From the settings given, the frame number is 0x1337b and the offset is 0xabe. So the real physical page we want to find is located in the 0x1337b-th entry of the page table. Suppose page_table[0x1337b] = 0xdeadb000, then the physical address of 0x1337babe is at 0xdeadb000 + 0xabe.

How are pages get mapped?

When a process is spawned, it needs memory to load its code. We give a page to it and map the physical and virtual addresses. Let’s say our program wants 0x1337babe to be its entry point, so we allocate a physical page (suppose 0xdeadb000) and mmap the frame number 0x1337b to it.

This design looks shitty still: what if another process with entry point 0x1337babf needs to be loaded into the memory? So we need to separate the page tables for different processes. For example, we set the page table for the first process to be at 0x0 (physical), the second to be at 0x512 (physical), and the third at 0x512 beyond the second one…

Doesn’t page table waste space?

Absolutely. Let’s do the math here: for a 32-bit address, it has 2 ^ 32 possibilities. If page size is 2 ^ 12, then there will be (2 ^ 32)/(2 ^ 12) which is 2 ^ 20 pages. Each entry in the page table needs 32-bits and there are a max of 2 ^ 20 entries possible. Therefore, one page table for a process needs 4MB.

This is already very expensive. If you have 100 processes running, that costs 400 MB of RAM.

2-level page table

Recall from the arbitrary agreements we have above, we now split a virtual address into three parts: [10 bits][10 bits][12 bits]. Let’s call the first 10 bits level-1-page index and the second level-2-page index.

Here’s the new 0x1337babe lookup procedure:

- Get the page table address. (let’s say 0x12340000)

- Find the level-1-page index. The first 10 bits are 76. We find the 76-th entry from 0x12340000, which is *(0x12340000 + 76 * 4). (let’s say 0x43210000).

- Find the level-2-page index. The second 10 bits are 891. We find the 891-th entry from 0x43210000, which is *(0x43210000 + 891 * 4). (let’s say 0xdeadb000)

- Add the offset to the result 0xdeadb000

Math again

How much space do we need this time? For a level-1-page table, there are 10 bits, so 2 ^ 10 possible entries. We need 4 * 2 ^ 10 which is 4096 bytes. For a level-2-page table, we also need 4096 bytes. So if a process only uses one page, it only needs one level-1-page table and one level-2-page table. So we need 8192 bytes only. The page table size increases, but we get the minimum possible when we don’t need that much. That saves memory a lot.

Switch to real-world again

In an amd64 CPU, there exists a register called cr3, and that stores our page table base address. Let’s go back to the example above. When a process is continued, the kernel sets the cr3 register to 0x12340000. Suppose we have an instruction mov [0x1337babe], 0. The CPU wants to know the physical address it needs to write to, so it goes over the process we did before *(*(cr3 + 76 * 4) + 891 * 4) + 0xabe.

Notice that Linux uses a 4-level page table (or 5, depending on build options) instead of the 2-level example we have here.

What does this empower us?

Let’s go back to the three problems we encountered earlier.

- Efficient use of memory

This is a better algorithm for sure, compared to the ones we invented before. Although it needs some extra space to store paging information, it overall is a very good tradeoff.

- Compilation needs to adapt

Surely it does not anymore. Two processes can have two exactly the same virtual addresses.

- Process isolation

Since each process has its own page table, they do not share the same physical memory anymore. One more thing is that each physical page is only owned by the correct processes, as they are only mapped to the page table of that process but not others'.

Some finishing words

- I did not mention permissions because they are simple to understand

- I did not mention TLB, as they are more hardware optimization related

- I did not mention page fault, since I think it is too advanced to be in an intro post

- I strongly recommend you to go through this lab

- I strongly recommend you to go over this project. It is a tool to see the page mapping of qemu-system.